Software development has been fundamentally changing. It's following the data and going to the cloud. What should organizations be aware of to make the most of it?

Software used to be the undisputed No. 1 concern for digital transformation. Not anymore. While software remains key, its importance has been at least equaled, if not overshadowed, by data.

Software can not only support data-driven decision making but also become data-driven itself, offering solutions in situations too complex to be handled with traditional procedural programming.

At the same time, the move of software development, and off-the-shelf applications, to the cloud creates an interplay with data. As more applications move to the cloud, the data they produce stay there, too. As data increasingly live in the cloud, applications follow.

We have been following the rise of machine learning and data-driven software development, or software 2.0, for a while now. We have talked about the strategic importance of machine learning for cloud providers. But what about cloud users?

Database workloads on the move

Besides the economics of working with the cloud, one of the key concerns for organizations is lock-in. A good way to deal with both is a multi-cloud and hybrid cloud strategy: Some data and applications stay on-premises in private clouds, while others move to a multitude of public clouds.

Pivotal, the vendor behind Cloud Foundry, recently partnered with Microsoft and Forrester on research evaluating enterprise use of hybrid cloud, including challenges around multiple cloud platforms and the critical capabilities that CIOs and other business technology leaders expect from their platforms.

The findings more or less confirm what we knew, or expected. Companies have multi-cloud and hybrid cloud strategies to an overwhelming extent -- 100 percent and 77 percent, respectively. They believe these strategies drive efficiency, flexibility, and speed. And they are particularly concerned about security, monitoring, and consistency. This is also in line with Cloud Foundry's positioning as a layer to abstract private and public cloud platforms.

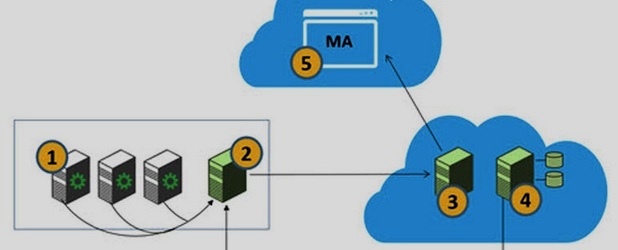

Hybrid cloud and multi-cloud strategies are the new normal, and database workloads are gravitating towards them. (Image: Tom's IT Pro)

But there are some findings regarding application workload migration that are worthy of particular attention. ZDNet discussed those findings with Josh McKenty, Pivotal's VP of Technology, and Ian Andrews, Pivotal's VP of Products.

First off, the report shows a massive drop between current and projected custom database workloads. When asked what their company's custom-developed application architectures include today, and how they see this a year from now, 57 percent of respondents said this includes database workloads today, but only 39 percent said this will be the case next year.

As database workloads are not going away anytime soon, one plausible explanation is that respondents actually mean they will be outsourcing database workloads to managed databases in the cloud. Even interpreted that way, it is still an impressive finding. Should we expect a massive migration to cloud databases?

McKenty thinks the definition of "database workloads" seems a bit odd, but his assumption is that it's things like PL/SQL or other stored procedures -- code that actually runs within the database:

"We've seen this as a primary area for modernization and refactoring work since it's not only not portable to a public cloud environment, it's actually the major vendor lock-in issue when looking to reduce license costs.

The refactoring work here is focused on moving these stored procedures outside of the database and into a modern software development process, including CI and automated testing."
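
As a rough illustration of the kind of refactoring McKenty describes, the sketch below moves a piece of business logic that might once have lived in a stored procedure into ordinary application code, where it can be unit tested in a CI pipeline. Everything here is an assumption made for illustration: the `invoices` table, the `late_fee` rule, and the function names are hypothetical, and the in-memory SQLite database merely stands in for whatever database an organization actually runs.

```python
import sqlite3
from decimal import Decimal


def late_fee(balance: Decimal, days_overdue: int) -> Decimal:
    """Hypothetical rule that used to live in a stored procedure:
    1% of the balance per full 30 days overdue, capped at 5%."""
    periods = min(days_overdue // 30, 5)
    return (balance * Decimal("0.01") * periods).quantize(Decimal("0.01"))


def apply_late_fees(conn: sqlite3.Connection) -> int:
    """Read invoices, compute fees in application code, write them back.
    Returns the number of invoices processed."""
    rows = conn.execute(
        "SELECT id, balance, days_overdue FROM invoices"
    ).fetchall()
    for invoice_id, balance, days_overdue in rows:
        fee = late_fee(Decimal(balance), days_overdue)
        # Amounts are stored as text so Decimal precision is preserved.
        conn.execute(
            "UPDATE invoices SET fee = ? WHERE id = ?", (str(fee), invoice_id)
        )
    conn.commit()
    return len(rows)
```

Because `late_fee` is a pure function, it can be tested without any database at all, and the SQL that remains is plain, portable `SELECT` and `UPDATE` statements rather than vendor-specific procedural code.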

A layered strategy for data migration

In any case, this seems in line with the tendency toward managed platforms and the data they work with moving to the cloud, and research respondents emphasize application and data migration. But what is the best strategy for data migration?

Could organizations potentially take a layered approach to this -- for example, choosing to host or migrate hot data to the primary operational environment, leaving warm and cold data to reside in other environments?
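
To make the layered idea concrete, here is a minimal sketch that classifies datasets as hot, warm, or cold by how recently they were accessed and maps each tier to a target environment. The thresholds (7 and 90 days), the environment names, and the function names are all assumptions chosen for illustration, not something taken from the research or from Pivotal.

```python
from datetime import datetime, timedelta

# Assumed thresholds; real values depend on access patterns and cost.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)

# Hypothetical target environments for each tier.
TIER_TARGETS = {
    "hot": "primary-operational",
    "warm": "secondary-cloud",
    "cold": "archive",
}


def classify_tier(last_accessed: datetime, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on time since last access."""
    age = now - last_accessed
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"


def plan_placement(datasets: dict[str, datetime], now: datetime) -> dict[str, str]:
    """Map each dataset name to the environment its tier should live in."""
    return {
        name: TIER_TARGETS[classify_tier(last_accessed, now)]
        for name, last_accessed in datasets.items()
    }
```

Recency of access is only one possible signal; an actual policy would likely also weigh data size, compliance constraints, and transfer costs between environments.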

What should users keep in mind to be able to move data between clouds?

When asked what he sees as the benefits and the risks to such an approach, Andrews noted that layers of data replication and migration are the key issue being worked on in many of their most strategic accounts:

"We frequently work with organizations in industries like insurance and banking where many of their core applications still run on or connect to a mainframe. Often the first stage of a modernization project in this architecture is the implementation of a data caching layer and a set of APIs to allow new application development without the direct interface to the mainframe environment. From this point a layered or staged approach to migration becomes possible."

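The first stage Andrews describes, a caching layer plus an API in front of the mainframe, can be sketched roughly as follows. This is a simplified read-through cache under stated assumptions: the class name, the `get_account` method, and the `fetch_from_mainframe` callable are hypothetical, and a real deployment would add expiry, error handling, and a shared cache rather than an in-process dictionary.

```python
from typing import Callable, Dict


class CachedAccountAPI:
    """Hypothetical API facade: new applications call this instead of
    talking to the mainframe directly."""

    def __init__(self, fetch_from_mainframe: Callable[[str], dict]):
        # The backend is injected, so it can later be swapped for a
        # migrated data store without changing any callers.
        self._fetch = fetch_from_mainframe
        self._cache: Dict[str, dict] = {}
        self.backend_calls = 0

    def get_account(self, account_id: str) -> dict:
        """Serve from the cache; fall through to the backend on a miss."""
        if account_id not in self._cache:
            self.backend_calls += 1
            self._cache[account_id] = self._fetch(account_id)
        return self._cache[account_id]

    def invalidate(self, account_id: str) -> None:
        """Drop a cached record, e.g. after the source of record changes."""
        self._cache.pop(account_id, None)
```

Because callers depend only on `get_account`, the function behind it can be replaced one dataset at a time, which is what makes a staged migration possible.
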
Custom insights or iPaaS?

The ability to work across clouds is a key selling point not just for a platform like Cloud Foundry, but also for iPaaS offerings. An increasing number of platforms, including Hadoop vendors, are positioning themselves as such.

When asked about his opinion on iPaaS and its adoption, McKenty said that they've seen limited adoption of both Dell Boomi and Mendix within their customer base, and noted that business process automation of various kinds is being applied as a cost-reduction strategy.

He added, however, that the larger arc of digital transformation work remains a focus on new product development and the shift to continuous delivery: "This is naturally focused on custom software development (where the business can establish some differentiation), and not a great fit for the low-code/no-code approach of iPaaS tooling."

If organizations need to have unique insights tailored to them, should they build or buy the platforms to generate them?

We have also noted that there is a tradeoff between off-the-shelf iPaaS and custom development. iPaaS can get organizations up and running faster than they would be starting from scratch, and in many cases, with better results, too. Still, McKenty's argument is valid to a certain extent.

While it is true that the mechanism for getting insights will not be a differentiator, the data will be, as they are unique to each organization. And we are not sure how many organizations will be able to produce something better than insight platform providers anyway. A best-of-both-worlds approach might be using iPaaS, and customizing where possible.

Avoiding cloud vendor lock-in

With software development changing and data gravity in the cloud increasing, is this something developers and decision makers should be cautious about?

Andrews thinks that for customers starting today there is a good opportunity to jump ahead of the typical infrastructure build-out that has become the common starting point for analytics projects. In fact, he says, with most public clouds you can also jump past the basic data preparation (e.g., entity extraction) and algorithm development:

"Leveraging these features of public clouds is almost certainly the right strategy for most organizations. However, while doing this an organization should be keenly aware of their data exit strategy.

If for some reason (e.g. cost) they want to switch to an alternate provider they'll need to consider time and costs of moving raw source data and post-processed 'insight data.' Without conscious thought on this strategy, the organization is almost certainly setting up a scenario where they'll find themselves locked-in at some future point."
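
A back-of-the-envelope version of the "time and costs" Andrews mentions might look like the sketch below. It is only an estimate under simple assumptions: a flat per-gigabyte egress price, a sustained transfer bandwidth, and decimal units (1 TB = 1,000 GB). The function name and all parameters are hypothetical, and actual provider pricing is usually tiered and should be checked directly.

```python
def estimate_exit(raw_tb: float, insight_tb: float,
                  egress_usd_per_gb: float, bandwidth_gbps: float) -> dict:
    """Estimate the cost and duration of moving raw source data and
    post-processed insight data out of a cloud provider."""
    total_gb = (raw_tb + insight_tb) * 1000       # decimal TB to GB
    egress_cost_usd = total_gb * egress_usd_per_gb
    # Gigabytes to gigabits (x8), divided by sustained gigabits per second.
    transfer_seconds = total_gb * 8 / bandwidth_gbps
    return {
        "total_gb": total_gb,
        "egress_cost_usd": egress_cost_usd,
        "transfer_hours": transfer_seconds / 3600,
    }
```

Even a crude model like this makes the point that insight data adds to the bill: the derived datasets have to move along with the raw sources, or be regenerated at the destination.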

The conclusion is that while software development is inevitably becoming cloud- and data-driven, organizations should not blindly give in to the convenience of handing everything off to a single vendor. Avoiding dependence on one vendor is a tried and true strategy, and it applies in the cloud as much as anywhere.
